3 Things to know when moving to public cloud

The company has decided that the benefits of public cloud are too great to ignore any longer. It has been running infrastructure capably (mostly) in data centers, and it’s now time to move. It’s certainly not the first to make this migration, so it should be pretty simple, right? Machines in the data center, machines in the cloud. Maybe VMware or Hyper-V has been leveraged in the data center, so the obvious thought is… How different could it be? The debates about lift and shift vs. lift and improve, GCP vs. Azure vs. AWS, and who has the best Kubernetes implementation have been had, the resulting decisions have been made, and the migration has begun. What’s missing?

I’ve rebuilt entire data centers with thousands of hosts on cloud principles. I’ve designed and helped implement global data center management systems. I’ve helped companies move successfully from data center to cloud. Based on this experience, there are three things clients need to know about this move: the cloud has its own CMDB, the only thing that knows about a thing is itself, and the advantage of cloud is flexibility.

1. Say adiós to your CMDB

In the data center, the CMDB (configuration management database) exists to represent the desired (approximate) state of the environment. It is a valuable component for organizations following ITIL. Ultimately, the actual state of your infrastructure should conform to this desired state so that it can inform decisions about the allocation of resources, workloads, and so on. In the cloud, maintaining an accurate representation of the state of the entire system is much more of a challenge for an external resource like a CMDB: nodes are more likely to disappear, networks are more likely to partition, databases and caches fail over automatically, etc. Your best “source of truth” in the cloud is the cloud provider’s API. Running `aws ec2 describe-instances` can often be one of the most repeated commands on an infrastructure team!
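As a small illustration of treating the provider’s API as the source of truth, the sketch below counts running instances per type from the JSON that `aws ec2 describe-instances` prints. It assumes the CLI’s default JSON output shape (a `Reservations` list, each holding `Instances`); the sample data is a trimmed, hypothetical stand-in for real output.

```python
from collections import Counter


def running_instances_by_type(describe_output: dict) -> Counter:
    """Count running EC2 instances per instance type.

    Assumes `describe_output` is the parsed JSON printed by
    `aws ec2 describe-instances` (Reservations -> Instances).
    """
    counts: Counter = Counter()
    for reservation in describe_output.get("Reservations", []):
        for instance in reservation.get("Instances", []):
            # Skip stopped, pending, terminated, etc.
            if instance.get("State", {}).get("Name") == "running":
                counts[instance["InstanceType"]] += 1
    return counts


# Trimmed, hypothetical sample shaped like the CLI's JSON output.
sample = {
    "Reservations": [
        {"Instances": [
            {"InstanceId": "i-0aaa", "InstanceType": "m5.large", "State": {"Name": "running"}},
            {"InstanceId": "i-0bbb", "InstanceType": "m5.large", "State": {"Name": "stopped"}},
        ]},
        {"Instances": [
            {"InstanceId": "i-0ccc", "InstanceType": "c5.xlarge", "State": {"Name": "running"}},
        ]},
    ]
}

for instance_type, count in sorted(running_instances_by_type(sample).items()):
    print(f"{instance_type}: {count}")
```

Against a live account, the CLI’s output could be piped in and read with `json.load(sys.stdin)`; the CLI can also narrow the results itself with `--filters Name=instance-state-name,Values=running`.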

The cloud provider API is great for listing how many instances of a specific type are running, the size of attached disks, and the permissions between resources, but it cannot, by itself, answer which teams or products are using those resources. It’s common to face a large bill at the end of the month and be left with the unenviable task of figuring out where those costs came from. For this reason, tagging in your cloud provider is your CMDB! Tagging your resources should be done according to Infrastructure as Code (IaC) principles: relying on people to tag resources appropriately and uniformly through a web console is too prone to oversight and inconsistency.

At a minimum, each resource in your cloud environment should have tags for:

- Name

- Department/Project/Product

- Role
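Because tagging is the CMDB, it is worth checking for gaps automatically rather than by eye. The following is a minimal sketch of such an audit over an inventory already fetched from the provider’s API; the inventory shape and the use of `Project` as the key for the Department/Project/Product tag are assumptions.

```python
# Minimum tags from the list above. "Project" stands in for the
# Department/Project/Product tag; the exact key names are an assumption.
REQUIRED_TAGS = ("Name", "Project", "Role")


def missing_tags(resources):
    """Map each non-compliant resource ID to the required tag keys it lacks.

    Each resource is assumed to look like {"id": ..., "tags": {key: value}}.
    Empty or whitespace-only values count as missing.
    """
    report = {}
    for resource in resources:
        tags = resource.get("tags", {})
        absent = [key for key in REQUIRED_TAGS if not str(tags.get(key, "")).strip()]
        if absent:
            report[resource["id"]] = absent
    return report


# Hypothetical inventory.
inventory = [
    {"id": "i-0aaa", "tags": {"Name": "web-1", "Project": "MustBeTheMoney", "Role": "web"}},
    {"id": "i-0bbb", "tags": {"Name": "batch-7", "Role": ""}},
    {"id": "vol-0ccc", "tags": {}},
]

for resource_id, absent in missing_tags(inventory).items():
    print(f"{resource_id}: missing {', '.join(absent)}")
```

In an IaC workflow, a check like this would more naturally run in CI before resources are created; on AWS, the AWS Config `required-tags` managed rule covers similar ground.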

More tags can help, but there is no need to add redundant information that can be determined by a lookup, such as an instance’s memory. It is, however, essential to designate a Project (or similar) tag as a “billing tag” if you’re working in AWS (AWS calls these cost allocation tags, and they must be activated in the Billing console before they appear in cost reports). These tags should also be applied widely to ensure consistency across containers, ElastiCache, RDS, DynamoDB, AKS, Cloud SQL, etc. Want to know how much of last month’s bill was consumed by Project MustBeTheMoney? With proper tagging in place, you can filter for that information. For the same reason, resist the urge to encode that information in convoluted naming conventions that require interpreting implicit logic. Dozens of tags can be applied to a resource, so there is no need for humans to memorize arcane translations to understand its purpose.
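Once a billing tag is applied consistently, answering the MustBeTheMoney question is essentially a group-by. The line items below are hypothetical and far simpler than a real AWS Cost and Usage Report; the sketch only shows how a single tag key turns a bill into per-project totals, and how untagged spend surfaces as its own bucket.

```python
from collections import defaultdict
from decimal import Decimal


def cost_by_tag(line_items, tag_key="Project"):
    """Sum cost per value of `tag_key`; missing or empty tags land in '(untagged)'."""
    totals = defaultdict(Decimal)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key) or "(untagged)"
        totals[owner] += Decimal(item["cost"])  # Decimal avoids float rounding on money
    return dict(totals)


# Hypothetical, heavily simplified billing line items.
line_items = [
    {"resource": "i-0aaa", "cost": "412.50", "tags": {"Project": "MustBeTheMoney"}},
    {"resource": "db-main", "cost": "980.00", "tags": {"Project": "MustBeTheMoney"}},
    {"resource": "vol-0ccc", "cost": "57.25", "tags": {}},
]

for project, total in sorted(cost_by_tag(line_items).items()):
    print(f"{project}: ${total}")
```

Any spend in the `(untagged)` bucket is exactly the cost nobody can explain at the end of the month, which is why tagging at creation time through IaC matters.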

Most companies have difficulty maintaining a consistent view even in slower-moving environments like a data center. For this reason, it’s best to rely on the provider’s API. Tracking state is a core competency for the cloud providers, and when we use their services, we benefit from the fact that the only thing that knows about a thing is itself.

2. The only thing that knows about a thing is itself

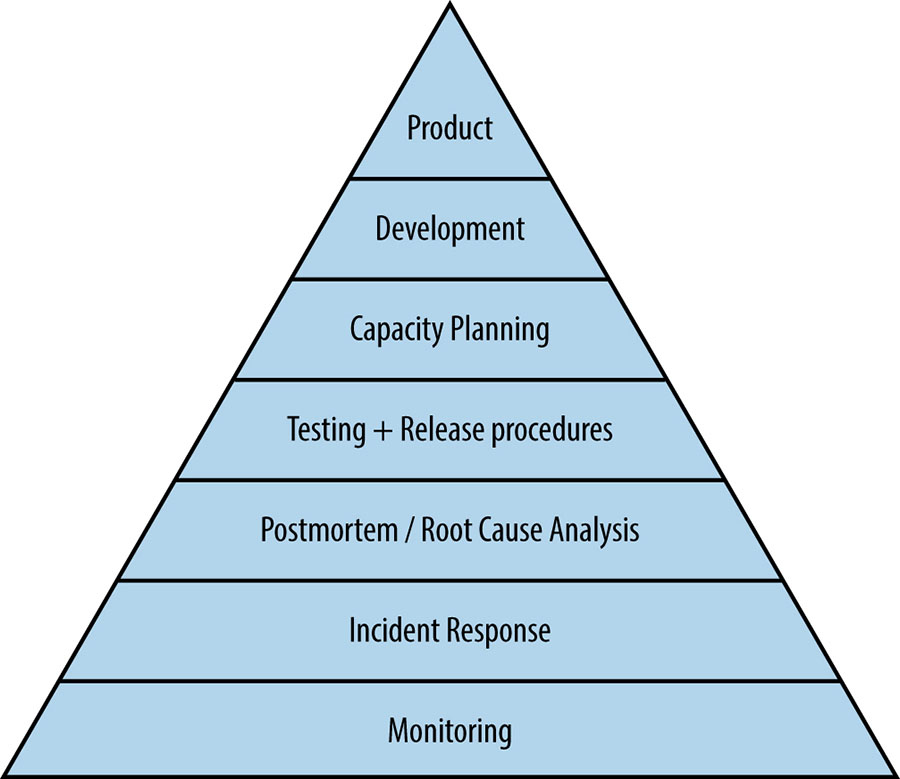

Part III of the Google SRE book opens with a Site Reliability Engineer’s (SRE) take on Maslow’s hierarchy of needs for running resilient infrastructure. It’s plain to see that monitoring is a foundational element (pun intended!) of delivering that service. In many respects, the data center implementation of infrastructure is more forgiving than the cloud implementation. For example, in the data center, specialized hardware can be purchased for specific tasks and there is very low latency between nodes. In the cloud, the options are limited to what the provider offers, and latency is measured in milliseconds. In these situations, it’s imperative to be able to see what is happening as it’s happening.

Understanding the state of our systems is important for running a resilient system whether in the data center or the cloud, but the cloud calls for different patterns. Where specialized hardware can’t be used to scale up or to enable high availability, it’s necessary to have a bias toward horizontal scalability to achieve the same result. The most effective way to manage all that infrastructure is to understand that the only thing that truly knows the state of a system is the system itself.

The monitoring system Prometheus was modeled after monitoring systems inside Google (notably Borgmon). Ever wondered why the Kubernetes API server has the /healthz (and now the /readyz and /livez) endpoints? It’s because the only thing that truly knows about a thing is itself! For Prometheus to understand the state of a system, it asks that system directly by scraping the metrics endpoint the system exposes. When a load balancer needs to bring something into service or take it out of service, what does it use? The health endpoint. It’s not enough to rely on a CMDB to provide this anymore.
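To make the “ask the system itself” idea concrete, here is a minimal, standard-library-only sketch of a service exposing its own liveness and readiness endpoints, plus a probe similar to what a load balancer health check does. The paths mirror the Kubernetes /livez and /readyz naming; the `READY` flag, the handler, and the helper names are hypothetical.

```python
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical readiness flag, set once the service has finished warming up
# (loading config, opening database connections, filling caches, ...).
READY = threading.Event()


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/livez":
            # Liveness: the process is running and can answer at all.
            self._reply(200, b"ok")
        elif self.path == "/readyz":
            # Readiness: only the service knows whether it can take traffic yet.
            if READY.is_set():
                self._reply(200, b"ready")
            else:
                self._reply(503, b"not ready")
        else:
            self._reply(404, b"not found")

    def _reply(self, status: int, body: bytes) -> None:
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging for the example


def serve_in_background(port: int = 8080) -> ThreadingHTTPServer:
    """Start the health server on a daemon thread (port 0 picks a free port)."""
    server = ThreadingHTTPServer(("127.0.0.1", port), HealthHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# An opener that ignores any configured HTTP proxy, since we probe localhost.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def probe(url: str) -> int:
    """Return the HTTP status code a load balancer's health check would see."""
    try:
        with _OPENER.open(url, timeout=2) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code
```

Separating liveness from readiness lets a service say “I’m alive, but don’t send me traffic yet” while it warms up, which is the signal a load balancer needs to bring it into or take it out of service.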

Instrumentation is critical in the cloud. Many assumptions about infrastructure that held in data centers are no longer true in the cloud. There should be application and infrastructure toolkits so developers and SREs can easily monitor what Google calls the Four Golden Signals: latency, traffic, errors, and saturation. Even within those signals, in the cloud it’s important to be mindful of p95 and p99 values, not just averages. Unfortunately, it’s not uncommon to discover that some portion of users are having a terrible experience because of small variations in infrastructure that is spread across multiple data centers (i.e., a properly deployed cloud infrastructure).
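Why watch p95 and p99 rather than the mean is easy to show with a toy example. This sketch uses the nearest-rank percentile definition and a hypothetical set of 100 request latencies in which a handful of slow requests barely move the average.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical latencies (ms) for 100 requests: most are fast, a few hit
# a slow path (say, a cross-zone hop or a cold cache).
latencies_ms = [20] * 94 + [350] * 3 + [900] * 3

mean = sum(latencies_ms) / len(latencies_ms)
print(f"mean={mean:.1f} ms  p50={percentile(latencies_ms, 50)} ms  "
      f"p95={percentile(latencies_ms, 95)} ms  p99={percentile(latencies_ms, 99)} ms")
```

Here the mean is about 56 ms and the median is 20 ms, while p95 is 350 ms and p99 is 900 ms. Monitoring systems usually estimate these values from histograms (for example, PromQL’s `histogram_quantile`), which interpolate within buckets, so their numbers will differ somewhat from this exact calculation.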

I typically recommend investing in a high-performance, real-time monitoring implementation run by a professional monitoring company over the tooling offered by the cloud providers. At one company, I was able to use this instrumentation to match the saturation characteristics of different workloads to the different hardware available, saving thousands of dollars a month. This is one example of how the cloud’s flexibility can be used to save costs.
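The exact method used isn’t described here, but one simple way to picture matching saturation to hardware is to size each workload by its observed peak CPU and memory and pick the cheapest shape that fits with some headroom. The catalog, shape names, and relative costs below are hypothetical placeholders, not real instance types or prices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Shape:
    name: str
    vcpus: int
    memory_gib: int
    relative_cost: float  # placeholder units, not real pricing


# Hypothetical catalog loosely mirroring general-purpose, compute-optimized,
# and memory-optimized families.
CATALOG = [
    Shape("general-4x16", vcpus=4, memory_gib=16, relative_cost=1.00),
    Shape("compute-4x8", vcpus=4, memory_gib=8, relative_cost=0.85),
    Shape("memory-2x16", vcpus=2, memory_gib=16, relative_cost=0.65),
]


def cheapest_fit(peak_vcpus: float, peak_memory_gib: float, headroom: float = 0.2) -> Shape:
    """Pick the cheapest shape whose CPU and memory cover observed peaks plus headroom."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gib * (1 + headroom)
    fits = [s for s in CATALOG if s.vcpus >= need_cpu and s.memory_gib >= need_mem]
    if not fits:
        raise ValueError("no single shape fits; consider scaling horizontally instead")
    return min(fits, key=lambda s: s.relative_cost)


# Hypothetical peaks taken from monitoring: (vCPUs, GiB).
workloads = {"api": (3, 5), "cache": (1.5, 12), "reports": (3, 10)}
for name, (cpu, mem) in workloads.items():
    print(f"{name}: {cheapest_fit(cpu, mem).name}")
```

A CPU-bound workload lands on the compute-leaning shape, a memory-bound one on the memory-leaning shape, and only the balanced workload needs the general-purpose option; without saturation data, all three would likely have been given the same general-purpose machine.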

3. Flexibility

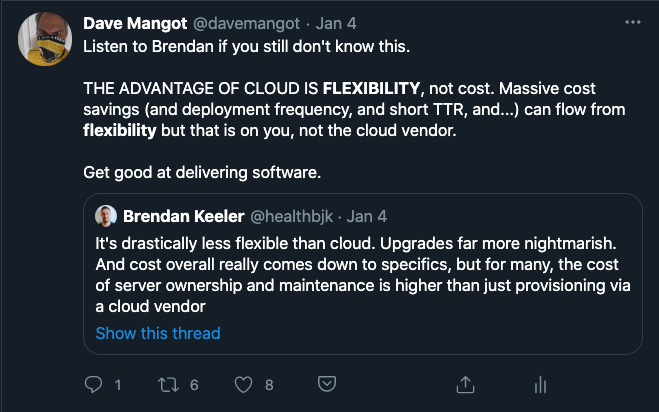

For whatever reason, the most common questions I’ve had over the years have been attempts to compare the costs of data center vs. cloud. I’ve been shown spreadsheets with servers, bandwidth, disks, etc. All the discussions are well-meaning, but Monday isn’t Wednesday, and public cloud is not just someone else’s data center.

The advantage of cloud is flexibility, not cost. As we’ve discussed above, in the cloud we scale horizontally. In the cloud, we can match workloads to infrastructure. In the cloud, we also don’t have to pay for resources that are not being used. In the data center, if I want to be able to migrate workloads between infrastructure, I need to own all that infrastructure. If I want to do the same in the cloud, I have the flexibility to spin up new infrastructure on demand and retire what I’m no longer using.
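The point about not paying for unused resources can be made with simple arithmetic. Under a hypothetical 24-hour demand profile, this sketch compares owning enough nodes for the peak all day with provisioning to each hour’s demand; it counts node-hours only and deliberately ignores pricing models and scaling lag.

```python
def node_hours(hourly_demand):
    """Return (fixed, elastic) node-hours for a demand profile.

    fixed:   own enough nodes for the peak hour, all day.
    elastic: provision exactly what each hour needs.
    """
    fixed = max(hourly_demand) * len(hourly_demand)
    elastic = sum(hourly_demand)
    return fixed, elastic


# Hypothetical 24-hour profile of nodes needed: quiet overnight, a daytime
# plateau, a short evening peak, then tapering off.
demand = [4] * 8 + [10] * 10 + [16] * 2 + [6] * 4

fixed, elastic = node_hours(demand)
print(f"fixed={fixed} node-hours, elastic={elastic} node-hours, "
      f"idle if fixed={1 - elastic / fixed:.0%}")
```

For this profile that is 384 node-hours fixed versus 188 elastic, so roughly half of a peak-sized fleet would sit idle over the day.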

I’ve worked with companies using this approach that replaced their generic storage subsystems with ones they’d developed themselves on standard AWS hardware and were able to reduce their largest cost, storage, by 75%. I’ve worked with companies that were able to reduce the Time to Recover (TTR) of their primary database systems from 24–48 hours down to 10 minutes, all while making the process simpler, better understood, and more flexible. Not because AWS offered them a solution that was competitive with something offered in the data center, but because AWS offered the flexibility to assemble components they did not own, like EBS snapshots, in ways that allowed them to achieve stunning results.

Set Sail

If the company you work for is joining the move to cloud, it’s an exciting time. Congratulations! All three major cloud providers have incredible offerings that let an organization take advantage of the flexibility that comes from being able to run rapid experiments to determine the optimal configurations for its specific problems.

If those organizations make sure they have solid instrumentation, so they have excellent visibility into what is happening in those experiments, and leave rigid sources of truth behind, the future is bright for a cheaper, faster, more agile path to achieving their business goals.