Respect for Production

Part of letting go of the old ways of doing things requires being a little bit uncomfortable. Challenging your own long-held beliefs is not easy. When I’m working with Operations teams who are used to working in a data center, the move to the cloud can be an uncomfortable transition.

A data center is relatively stable:

- Machines don’t suddenly disappear (even with VMware)

- Hosts live for a long time, because you’ve made the hardware investment

- Once you’ve configured a host, other than patches/upgrades/deployments, it doesn’t really change

- Security is generally a hard candy shell and a soft gooey center

There are a ton of fancy vendor data center solutions to choose from, sold by companies like VMware, F5, Palo Alto, Pure Storage, etc. Operations teams come to believe that the value they bring to an organization is that they know how to configure things that are hard to configure.

When these teams move to the cloud, they carry on with the same tasks, sometimes even bringing the above vendors with them. What vendor is going to turn down cloud revenue?!

When they log onto a host and change things, I tell them that they don’t have Respect for Production. As you can imagine, this ruffles some feathers. They protest about how careful they were, or how they followed all the change management procedures and worked the ticket. It was approved!

That’s beside the point. If you truly respected production, you would never log onto a server. In the cloud, if you’re doing it right, there should never be a reason to log onto a host. Machines should configure machines. Machines should perform deployments. Logs and metrics should be sent to logs and metrics services.

The automation can be traditional configuration management, GitOps for Kubernetes, or whatever. After you’ve successfully automated all aspects of creating and destroying a server, the mere thought of hand-configuring a server feels dirty. If you want portfolio companies to go fast (and with high quality), configuring machines with code is table stakes, and then we can do better.
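As a rough illustration of what "machines should configure machines" means in practice, here is a minimal sketch of the desired-state idea behind most configuration management tools. It is not any particular tool's API: `HostState` and `plan` are hypothetical names, and the package and service values are made up. The point is only that the change is computed from code, not typed by a person on the host.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class HostState:
    """Hypothetical model of a host: installed packages and running services."""
    packages: dict[str, str]   # package name -> exact version
    services: frozenset[str]   # names of services that are running


def plan(desired: HostState, actual: HostState) -> list[str]:
    """Return the actions needed to move a host from `actual` to `desired`."""
    actions = []
    # Install or change any package whose version differs from the desired one.
    for name, version in sorted(desired.packages.items()):
        if actual.packages.get(name) != version:
            actions.append(f"install {name}={version}")
    # Remove anything that is on the host but not declared in code.
    for name in sorted(actual.packages.keys() - desired.packages.keys()):
        actions.append(f"remove {name}")
    # Reconcile services the same way.
    for svc in sorted(desired.services - actual.services):
        actions.append(f"start {svc}")
    for svc in sorted(actual.services - desired.services):
        actions.append(f"stop {svc}")
    return actions


desired = HostState({"nginx": "1.24.0"}, frozenset({"nginx"}))
drifted = HostState({"nginx": "1.22.1", "telnetd": "0.17"}, frozenset())
for action in plan(desired, drifted):
    print(action)
```

When a host already matches the declared state, `plan` returns an empty list, which is why running the same code every time is safe in a way that hand edits are not.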

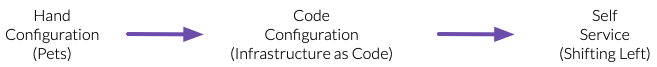

It’s one thing to move from hand configuration to running consistent code every time; it’s even better when you trust your code enough to let developers (gasp!) run it through self-service tools that enable the team to shift left (make it safe and easy).

Autoupdate

Respect for Production means that we tightly control (through code) what is allowed to run on and configure our servers. However, almost every DevOps tool vendor out there promises to keep their software up to date on your servers with their autoupdate tool. This is actually a Respect for Production violation!

Yes, their software is well tested, and autoupdate is different from self-service. It’s pretty much no service! But autoupdate doesn’t show Respect for Production. If we’re tightly controlling how our servers are configured, autoupdate is a nightmare. On your phone, fine. In your home network, no problem.

In professional engineering, nothing changes on our servers unless we can make guarantees about those changes. That rules out some well-intentioned engineer at a vendor pushing an update at some random time. This also goes for the software we build. Developers should pull their libraries and artifacts from internal repositories, not from the Internet. Without this tight control, everything becomes a guess as to what’s out there. Could we create it again? With this tight control, we can reliably recreate anything we want, in minutes.
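One way to enforce this kind of control is a check in the build pipeline. The sketch below is an assumption-laden example, not a standard tool: it assumes pip-style requirement lines such as `name==version`, and the host `artifacts.internal.example.com` is a made-up stand-in for your internal repository. It flags any dependency that is not pinned to an exact version and any package index that is not internal.

```python
from __future__ import annotations

from urllib.parse import urlparse

# Hypothetical internal repository host; replace with your own.
INTERNAL_HOSTS = {"artifacts.internal.example.com"}


def violations(requirements: list[str], index_url: str) -> list[str]:
    """Return a list of problems; an empty list means the build is controlled."""
    problems = []
    # The package index must be one of our internal repositories.
    host = urlparse(index_url).hostname
    if host not in INTERNAL_HOSTS:
        problems.append(f"index {index_url} is not an internal repository")
    for line in requirements:
        # Drop comments and blank lines.
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        # Simplified rule: anything without '==' could change underneath us.
        if "==" not in line:
            problems.append(f"{line} is not pinned to an exact version")
    return problems


for problem in violations(["requests>=2.0", "flask==3.0.0"], "https://pypi.org/simple"):
    print(problem)
```

A pipeline could fail the build whenever the returned list is non-empty. The `==` test is deliberately crude; a real check would need to handle the full requirement syntax.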

Not knowing what’s out there lessens the value of your testing, destroys your velocity, and leads to less reliability. Does any company want to deliver less than the competition?

You can do it!

CTOs often tell me that their Ops folks are too old school to learn to do Infrastructure as Code, and then the team does it. It’s not hard. Generative AI is very useful here. It doesn’t have to be the best code in the world.

Kids, amateurs, and inexperienced folks write code. They just don’t have Respect for Production.